Reading Notes

A running log of my casual notes, thoughts, and summaries on papers in performance engineering, MLSys, and HPC.

This is a living document containing my notes on various papers.

ProfileMe ‘97

Compaq's Alpha 21264 provides two performance counters that support privilege-mode qualification and interrupt generation on counter overflow.

| Feature | ProfileMe | NVIDIA CUPTI PC Sampling |
|---|---|---|
| Company | Digital Equipment Corp. (DEC) | NVIDIA |
| Target Architecture | Out-of-Order Superscalar CPUs | GPUs (SIMT architecture) |
| Chips | Compaq Alpha 21264 processor | NVIDIA GPUs (e.g., Ampere, Hopper, Blackwell) |
| Core Goal | Identify instruction-level bottlenecks and accurately attribute events to specific instructions | Identify warp-level stall bottlenecks and attribute them to the responsible code |
| Sampling Philosophy | “Instruction Biography”: Follow a single, randomly chosen instruction through its entire lifecycle. | “Warp Snapshot”: Periodically record the current PC and stall reason of an active warp on each SM. |
| Sampling Trigger | Instruction-Triggered: A hardware counter randomly selects an instruction to profile. | Time-Triggered: A fixed interval of clock cycles triggers a sample on every SM. |
| Data Collection Model | Longitudinal & Deep: Gathers a rich, multi-faceted record for one instruction’s complete execution. | Cross-Sectional & Broad: Gathers a focused record from many warps at a single moment in time. |
| Data Richness / Sample | Very High: Includes PC, multiple pipeline stage latencies, cache miss data, effective addresses, completion status, etc. | Focused: Primarily includes the PC and the sampled warp’s stall reason at that cycle. |
| Unit of Analysis | A single instruction. | A warp (a group of 32 threads executing in lockstep). |
| Hardware Requirement | Requires new, specific hardware modifications to the CPU core, such as dedicated counters and registers. | Uses existing, built-in Performance Monitoring Units (PMUs) that are standard on modern GPUs. |
| Key Innovation | Paired Sampling: Simultaneously profiles two instructions to measure concurrency and interaction on an out-of-order CPU. | Scalability: Provides a scalable method to analyze performance across thousands of parallel threads. |

- Same Spirit: Both ProfileMe and CUPTI PC Sampling are designed to move beyond simple event counting to provide developers with precise, actionable data that links performance bottlenecks directly to the source code.

- Different Worlds: ProfileMe was a pioneering proposal for the complex, single-thread-focused world of out-of-order CPUs, requiring deep analysis of one instruction’s life. CUPTI PC Sampling is the practical, modern implementation of that spirit, adapted for the massively parallel world of GPUs, where understanding the collective behavior of thousands of threads is key.
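To make the “biography vs. snapshot” contrast concrete, here is a toy Python simulation. It is purely illustrative and not how either system is implemented: the record formats, the stall-reason strings, the randomized countdown reload, and the choice to always sample the first active warp are my own simplifying assumptions, not the ProfileMe hardware or the CUPTI API.

```python
import random
from collections import Counter, defaultdict


def instruction_triggered(instructions, mean_gap, seed=0):
    """ProfileMe-style sampling (toy model): a countdown over fetched instructions
    is reloaded with a random value, and whichever instruction hits zero is tagged.
    The whole per-instruction record (latencies, misses, ...) is kept."""
    rng = random.Random(seed)
    countdown = rng.randint(1, 2 * mean_gap)  # randomized to avoid aliasing with loops
    tagged = []
    for record in instructions:
        countdown -= 1
        if countdown == 0:
            tagged.append(record)
            countdown = rng.randint(1, 2 * mean_gap)
    return tagged


def time_triggered(cycles, interval):
    """PC-sampling-style (toy model): every `interval` cycles, take the
    (pc, stall_reason) of one active warp and fold it into a per-PC histogram."""
    hist = defaultdict(Counter)
    for cycle in range(0, len(cycles), interval):
        active_warps = cycles[cycle]
        if active_warps:  # nothing to sample if no warp is resident
            pc, reason = active_warps[0]  # simplification: always the first warp
            hist[pc][reason] += 1
    return hist


if __name__ == "__main__":
    tagged = instruction_triggered(list(range(1000)), mean_gap=10)
    print(len(tagged), tagged[:3])

    trace = [[(0x40, "memory")], [], [(0x48, "math")], [(0x40, "memory")]]
    print({hex(pc): dict(c) for pc, c in time_triggered(trace, 1).items()})
```

The first function keeps a few complete records; the second keeps many tiny ones and only becomes meaningful once aggregated per PC, which mirrors the “deep vs. broad” rows of the table.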

gprof ‘82

gprof measures cost with statistical sampling: it periodically samples the program counter to determine how much self-time each function accumulates, while compiler-inserted instrumentation (the -pg flag) counts the calls along each caller-callee arc. It then distributes cost by proportional propagation: a function's total time (its self-time plus the time propagated up from its own callees) is divided among its callers in proportion to how many times each one called it.

A critical aspect of gprof's design is a simplifying assumption it makes to maintain low overhead: it assumes that every call to a particular function takes the same amount of time. The profiler calculates a function's total self-time from all its executions and then effectively computes an "average cost per call." This average cost is what gets distributed to its callers.

However, this model has limitations, as the paper itself acknowledges. Consider a function like matrix_multiply(size). If one part of the program calls it with a small 4x4 matrix, while another calls it with a massive 1000x1000 matrix, the actual costs are vastly different. gprof will average these costs, potentially over-attributing time to the cheap call and under-attributing it to the expensive one. This trade-off between precision and low performance impact is a fundamental characteristic of gprof's design.
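A small sketch of the propagation rule, applied to the matrix_multiply example above. This is my own re-implementation of the model described in the paper, not gprof's source; the function names and timings are hypothetical. Real gprof also collapses recursive cycles into single nodes, which this sketch omits (graphlib raises CycleError on a cyclic graph).

```python
from collections import defaultdict
from graphlib import TopologicalSorter


def gprof_total_times(self_time, arcs):
    """self_time: {function: sampled self-time in seconds}
    arcs: {(caller, callee): call count}
    Returns {function: self-time + share of callees' totals}, splitting each
    callee's total among its callers in proportion to call counts
    (the "every call costs the same" assumption). Acyclic graphs only."""
    callers_of = defaultdict(dict)
    graph = {f: set() for f in self_time}  # node -> nodes that must be processed first
    for (caller, callee), count in arcs.items():
        callers_of[callee][caller] = count
        graph.setdefault(caller, set()).add(callee)
        graph.setdefault(callee, set())
    total = {f: self_time.get(f, 0.0) for f in graph}
    # Callees come before their callers, so a node's total is final when visited.
    for func in TopologicalSorter(graph).static_order():
        calls = callers_of.get(func, {})
        n_calls = sum(calls.values())
        for caller, count in calls.items():
            total[caller] += total[func] * count / n_calls
    return total


self_time = {"main": 0.0, "small_caller": 0.0, "big_caller": 0.0, "matrix_multiply": 100.0}
arcs = {
    ("main", "small_caller"): 1,
    ("main", "big_caller"): 1,
    ("small_caller", "matrix_multiply"): 10,  # ten cheap 4x4 calls
    ("big_caller", "matrix_multiply"): 1,     # one huge 1000x1000 call
}
totals = gprof_total_times(self_time, arcs)
print({f: round(t, 2) for f, t in totals.items()})
```

Even if almost all 100 s came from the single 1000x1000 call, small_caller is charged 10/11 of it (about 90.9 s) simply because it made more calls.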

DSL and DSA ‘20

- 2005-2017: The Multicore Era
- What’s the opportunity?
  - SW-centric
    - Modern scripting languages are interpreted, dynamically-typed and encourage reuse
    - Efficient for programmers but not for execution
  - HW-centric
    - Only path left is Domain Specific Architectures
    - Just do a few tasks, but extremely well
  - Combination
    - Domain Specific Languages & Architectures

The Hardware Lottery ‘21

Hooker introduces the term “hardware lottery” to describe a phenomenon where research ideas succeed not because they are inherently superior, but because they happen to be compatible with available hardware and software at the time. This creates noise in the scientific process, potentially causing promising research directions to be overlooked or delayed for decades due to lack of suitable tools, while suboptimal ideas that align with existing infrastructure flourish.

To avoid future hardware lotteries, the key lies in reducing the cost and complexity of exploring different algorithm-software-hardware combinations.

Hardware Challenges:

- Cost as a bottleneck: Developing new-generation chips requires enormous investment (tens of millions of dollars and several years), limiting hardware developers’ willingness to take risks.

- Emerging directions: While reconfigurable hardware like FPGAs offers flexibility, programming them remains extremely challenging. More speculative research areas—neuromorphic computing, optical computing, and quantum computing—require sustained, large-scale funding from both public and private sectors, yet current investment remains insufficient.

Software Revolution:

- Bridging the cognitive gap: ML researchers currently struggle to quantify the interaction costs between their algorithms and hardware due to the lack of accessible benchmarking tools.

- Elevating software abstractions: A viable medium-term goal is addressing these challenges through software innovation. Developing domain-specific languages (DSLs) and auto-tuning tools would allow developers to focus on algorithmic intent without concerning themselves with low-level hardware implementation details, achieving cross-hardware portability and efficiency.

- Optimizing the software stack: During the Moore’s Law era, software efficiency was neglected because hardware performance gains could compensate for software inefficiencies. Today, there exists tremendous opportunity and potential in optimizing the software stack.

The Bitter Lesson ‘19

The most powerful advances in AI come not from encoding human knowledge, but from leveraging massive computational power through general methods like search and learning.

The “Bitter Lesson” refers to a recurring pattern in AI history:

- Short-term Temptation: Researchers often try to embed their own understanding and domain-specific knowledge into AI systems.

- Short-term Success: These efforts yield initial improvements, giving a sense of progress.

- Long-term Limitations: Over time, however, such hand-crafted approaches hit a ceiling and fail to scale.

- Breakthrough via Computation: Ultimately, breakthroughs emerge from approaches that exploit large-scale computation—often through brute-force search or learning—rather than human-designed strategies.

Historical Examples:

- Chess: The defeat of chess champion Garry Kasparov by Deep Blue in 1997 was achieved not by mimicking human thought, but by deep, brute-force search.

- Go: Early Go programs focused on human-like strategies, but real progress came with AlphaGo, which combined massive computation with self-play learning.

- Speech Recognition: Initial systems relied on linguistic knowledge, but statistical models and later deep learning—requiring less human input—proved far superior.

- Computer Vision: Hand-crafted features gave way to deep neural networks, which, with enough data and computation, learned far more effective representations.

Underlying Reason:

This lesson is driven by the exponential growth in computational power (à la Moore’s Law). Methods that scale with computation, namely search and learning, consistently outperform those limited by human knowledge.

What Should We Learn?

- Embrace General Methods: Prioritize approaches that harness computation and can scale, such as search and learning.

- Avoid Hard-coding Human Knowledge: The world’s complexity resists simple, hand-crafted representations. Instead, build systems that can autonomously discover and model this complexity.

In essence, the future of AI lies in creating systems that learn and discover as we do, not ones that merely reflect what we already know.

3Ps of PP ‘19

A successful parallel programming model should have three broad characteristics (known as the three Ps): productivity, performance, and portability.

Torch ‘02

This paper prompted me to look into the history of PyTorch.

Torch’s journey spans 20+ years: the C-based Torch (2002) laid the modular groundwork, Torch7 (2011) rebuilt it around a LuaJIT front end with C/CUDA back ends, and frustration with Lua’s niche ecosystem led members of the Torch community to ship PyTorch 0.1 in January 2017, bringing define-by-run automatic differentiation to Python. PyTorch 1.0 (Dec 2018) added TorchScript and ONNX export so the research-friendly API could ship to production, and in 2022 Meta transferred the project to a vendor-neutral PyTorch Foundation. The compiler-driven PyTorch 2.0 series (March 2023 →) layers torch.compile and TorchInductor on top of eager mode, delivering large speedups with a one-line change (wrapping a model in torch.compile).
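Since define-by-run is the idea that made PyTorch feel different from static-graph frameworks, here is a toy scalar autograd in plain Python that records the graph as ordinary code executes. It is my own illustration of the concept (the Value class is hypothetical), not how PyTorch’s autograd is implemented.

```python
class Value:
    """Scalar that records the ops applied to it as Python runs (define-by-run)."""

    def __init__(self, data, parents=(), backward_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents            # Values this one was computed from
        self._backward_fns = backward_fns  # maps upstream grad -> grad for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Topologically order the graph that was recorded during the forward pass.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):  # outputs first, inputs last
            for parent, fn in zip(v._parents, v._backward_fns):
                parent.grad += fn(v.grad)


def power4(x):
    y = x
    for _ in range(3):  # plain Python loop; the graph is whatever actually ran
        y = y * x
    return y


x = Value(3.0)
y = power4(x)
y.backward()
print(y.data, x.grad)  # 81.0 108.0, i.e. x**4 and 4*x**3 at x = 3
```

Because the graph is rebuilt on every call, data-dependent loops and branches need no special graph operators; that flexibility is what torch.compile later has to recover graphs from.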

Historical evolution at a glance

| Era (Years) | Library | Key milestones & capabilities | Representative articles |
|---|---|---|---|
| Early Torch (2002 – 2005) | Torch (C + scripting) | Collobert & Bengio introduce a BSD-licensed, modular ML library with BLAS/ATLAS kernels (publications.idiap.ch) | “Torch: A Modular Machine Learning Software Library” (2002) (publications.idiap.ch) |
| Torch7 (2011 – 2017) | Torch7 (LuaJIT) | Full rewrite around a LuaJIT front end; CUDA tensor backend; adopted at Facebook AI & DeepMind (ronan.collobert.com, en.wikipedia.org) | Collobert et al., “Torch7: A MATLAB-like Environment for ML” (2011) (ronan.collobert.com) |
| PyTorch 0.x preview (Jan 2017 – mid-2017) | PyTorch 0.1–0.2 | Define-by-run dynamic graphs, CUDA tensors, eager autograd; fast researcher uptake (github.com, pytorch.org) | GitHub release timeline (0.1) (github.com); “PyTorch, a Year In” (2018-01-19) (pytorch.org) |
| PyTorch 1.x growth (2018 – 2022) | PyTorch 1.0–1.13 | 1.0 stable (Dec 2018) merges the Caffe2 runtime, adds the TorchScript compiler & ONNX export; ecosystem libraries and cloud support blossom (engineering.fb.com, developers.facebook.com, pytorch.org) | FB Eng. Blog “Developer Ecosystem Expands, 1.0 Stable” (2018-12-07) (engineering.fb.com); FB Dev Blog “Announcing PyTorch 1.0” (2018-05-02) (developers.facebook.com) |
| Governance shift (Sept 2022) | PyTorch Foundation | Meta donates the project to the new PyTorch Foundation under the Linux Foundation; multi-vendor board (AMD, AWS, Google, Microsoft, Nvidia) (linuxfoundation.org, axios.com) | Linux Foundation press release (2022-09-12) (linuxfoundation.org); Axios coverage “Meta moves PyTorch to LF” (2022-09-12) (axios.com) |
| PyTorch 2.x compiler era (Mar 2023 →) | PyTorch 2.0–2.6 | torch.compile uses TorchDynamo to capture graphs from eager code and TorchInductor to generate optimized kernels, for 30–200% speedups; dynamic shapes & backend plug-ins keep expanding (pytorch.org, pytorch.org) | PyTorch Blog “PyTorch 2.0: Next-generation Release” (2023-03-15) (pytorch.org); “PyTorch 2.x Overview” docs (pytorch.org) |

EasyView ‘24

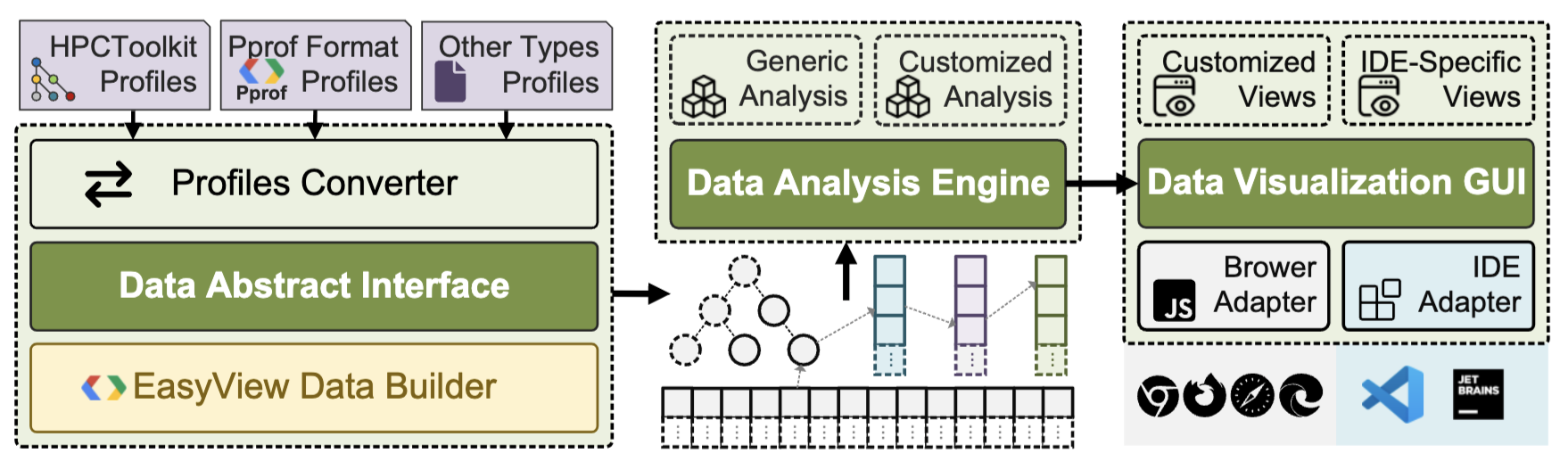

Traditional profiling tools are often separate from IDEs, creating a steep learning curve and forcing developers to switch contexts between coding and analysis, which is inefficient. While some IDEs have attempted to integrate profilers, these solutions typically offer limited support, basic functionality, and poor performance with large analysis files.

EASYVIEW addresses these pain points by bringing performance analysis directly into the coding environment. Its core advantages include:

-

Generic by Design: EASYVIEW uses a common data abstraction interface to support a wide range of mainstream profilers, including PProf and HPCToolkit. This frees developers from needing to learn multiple tools for different analysis needs.

-

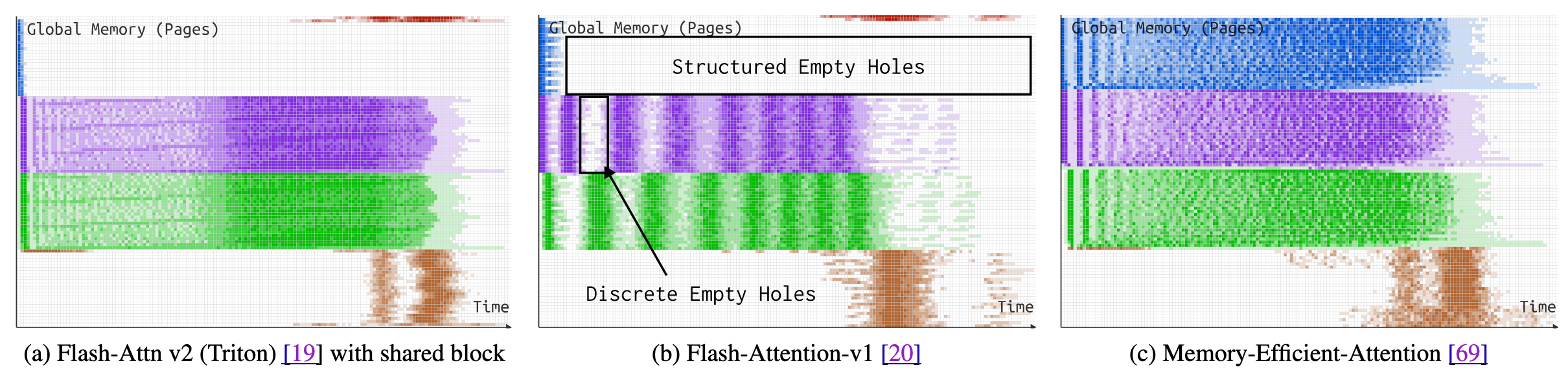

Deep Performance Insights: EASYVIEW provides innovative, advanced views beyond traditional flame graphs.

-

Differential View: It clearly quantifies performance changes between two runs (e.g., before and after an optimization). It uses tags like

[+],[-],[A], and[D]to intuitively display performance gains, regressions, new calls, and deleted calls. - Correlated View: It can link multiple profiles to uncover deep insights.

-

Differential View: It clearly quantifies performance changes between two runs (e.g., before and after an optimization). It uses tags like

-

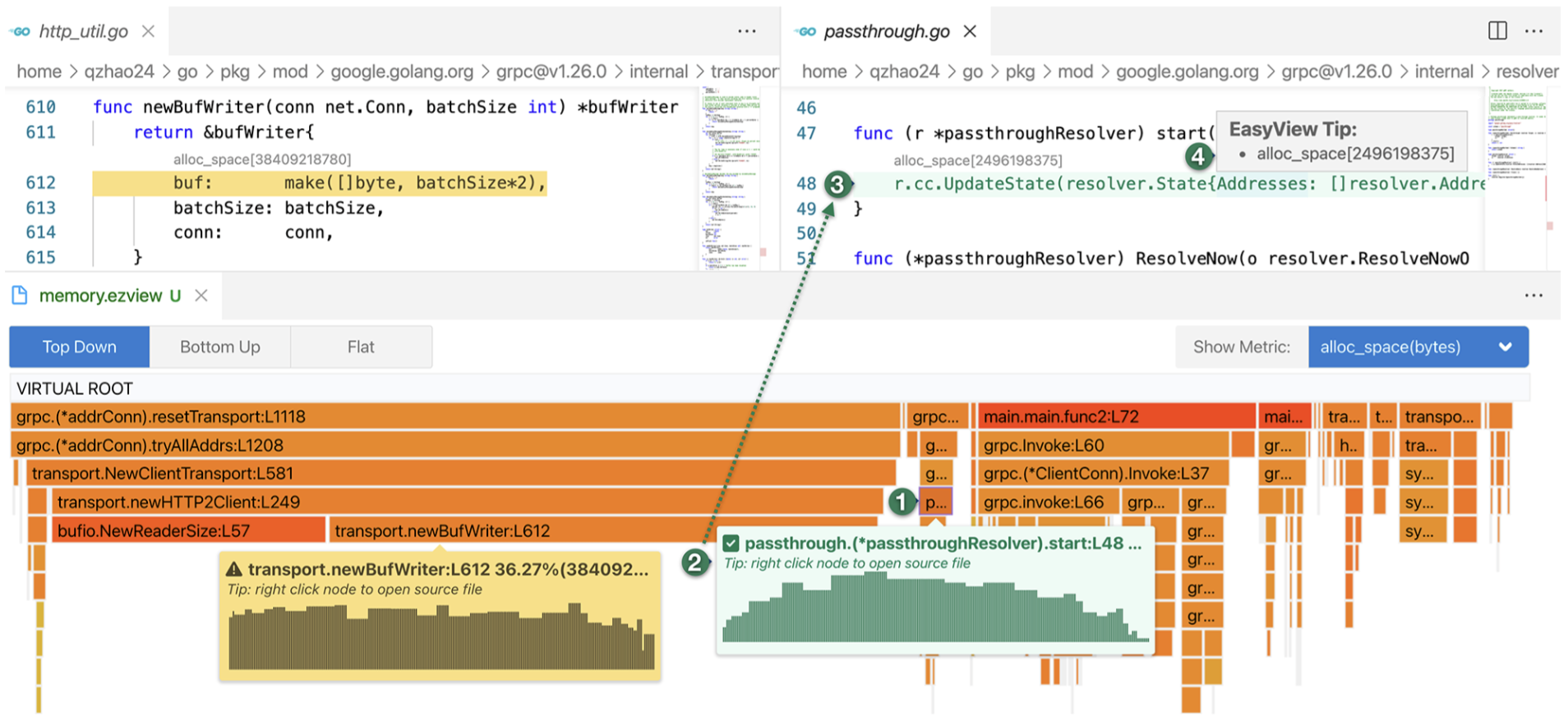

Superior User Experience: As a VSCode extension, EASYVIEW tightly integrates performance data with source code.

- In-IDE Analysis: Users can click directly on a flame graph to jump to the corresponding line of code and view detailed metrics in hovers and code lenses, all without leaving the IDE. Figure 2 perfectly illustrates this seamless interactive experience.

- Efficient and Smooth: The tool is optimized to handle large performance profiles (up to 1GB) with high efficiency, ensuring a smooth and responsive analysis experience that is much faster than comparable tools.
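The differential view boils down to a keyed diff over call paths. Here is a minimal sketch under stated assumptions: profiles are plain {call_path_tuple: metric} dicts, and the tag semantics ([A] only in the new run, [D] only in the old run, [+]/[-] metric grew or shrank) are my reading of the notes above, not EasyView’s actual data model or code.

```python
def diff_profiles(before, after):
    """Compare two profiles given as {call_path_tuple: metric} dicts.
    Assumed tag semantics: [A] path only in `after`, [D] path only in `before`,
    [+] metric grew, [-] metric shrank; unchanged paths are skipped."""
    rows = []
    for path in sorted(before.keys() | after.keys()):
        if path not in before:
            rows.append(("[A]", path, after[path]))
        elif path not in after:
            rows.append(("[D]", path, -before[path]))
        else:
            delta = after[path] - before[path]
            if delta > 0:
                rows.append(("[+]", path, delta))
            elif delta < 0:
                rows.append(("[-]", path, delta))
    return rows


# Hypothetical profiles (seconds per call path) before and after an optimization.
before = {("main", "parse"): 5.0, ("main", "solve"): 20.0, ("main", "old_io"): 3.0}
after = {("main", "parse"): 5.0, ("main", "solve"): 12.0, ("main", "new_io"): 1.0}
for tag, path, delta in diff_profiles(before, after):
    print(tag, " > ".join(path), f"{delta:+.1f}s")
```

A real tool would diff whole calling-context trees and propagate inclusive metrics; the flat dict keeps the tagging logic visible.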

Deep learning with COTS HPC systems ‘13

The paper (Coates et al., ICML 2013) demonstrated that large-scale deep neural networks could be trained efficiently on clusters of commodity off-the-shelf (COTS) hardware, specifically GPU servers interconnected with InfiniBand. Prior to this work, training billion-parameter models required large CPU clusters (e.g., 16,000 cores across 1,000 machines). The authors showed that by leveraging GPUs, which offer far greater arithmetic throughput than standard CPUs, together with optimized distributed computing strategies, a 1-billion-parameter network could be trained on just 3 machines in a couple of days, and networks with over 11 billion parameters on 16 machines.

Neutrino ‘25

| Dimension | DeepContext | NEUTRINO |
|---|---|---|
| Instrumentation / Data Pipeline | Uses a shim layer (DLMonitor) to intercept deep-learning framework calls, CUPTI/RocTracer GPU events and CPU Perf/PAPI counters, then co-aggregates them in one profile database. | Injects “probes” (snippet + trace-point + map) directly into GPU assembly at runtime; a hook driver and probe engine reassemble the code and stream results to user space. |
| Context-breadth reconstructed | Rebuilds the full call-path (Python ➜ DL operator ➜ C++ ➜ GPU kernel) across PyTorch & JAX, NVIDIA & AMD GPUs, and even CPUs, so one trace covers the whole stack. | Stays inside a single GPU kernel but reaches instruction-level detail; works on both NVIDIA & AMD back-ends. |
| Automation & Guidance | Ships a pattern-matching performance analyzer that auto-suggests fixes (e.g., fuse ops, change data layout). | Provides raw measurements; interpretation is left to the user. The paper explicitly says leveraging those traces “is not our primary goal.” |
| Visual Experience | Integrated VS Code panel with hierarchical flame-paths and metric drill-downs. | Introduces the DMAT plot that overlays memory-pressure, time and thread density in one heat-map for instant visual insight. |
| Programmability / Extensibility | Users extend analysis by writing new pattern-rules over the stored context/metrics; instrumentation itself is fixed (handled by DLMonitor). | A Python DSL or TOML lets you write custom probes, combine them cooperatively and even build ring-buffer maps, akin to eBPF for GPUs. |
| Cross-Platform Coverage | One binary profiles PyTorch or JAX on NVIDIA, AMD, x86 or ARM with the same UI. | Currently supports CUDA 12 + ROCm/HIP on Linux for NVIDIA & AMD GPUs. |
| Profiling Overhead | Comparable to state-of-the-art tools while cutting trace size dramatically. | Lightweight probes slow kernels only 1.04× on average and burn ≈ 4 extra registers; heavy DMAT probes are ~7×. |
| Safety / Verification | Relies on mature vendor APIs, so safety comes “for free” from CUPTI/RocTracer. | Includes a verifier that rejects probes touching original registers, flow-control instructions or shared-memory abuse, preventing crashes. |

How they fit together

- DeepContext tells you which part of an ML workload is misbehaving and why at a macro level, which suits everyday model developers.

- NEUTRINO zooms into a single GPU kernel to expose how each instruction behaves, which suits system researchers and kernel authors. In practice you would first run DeepContext to flag a suspicious kernel, then attach a NEUTRINO probe to dissect that kernel’s internals, turning two complementary lenses into one complete diagnostic workflow.
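To get a feel for what a DMAT-style view encodes, here is a rough approximation: bin (clock, address) records into a time × address-range grid and count accesses per cell. The trace format, bucket sizes, and ASCII rendering are hypothetical, and Neutrino’s actual DMAT also encodes thread density and is built from its own probe output; this only shows the basic densification idea.

```python
from collections import Counter


def access_density(trace, time_bin, addr_bin):
    """trace: iterable of (clock, address) pairs, e.g. one record per load/store
    logged by a hypothetical memory-access probe.
    Returns Counter{(time bucket, address bucket): number of accesses}."""
    return Counter((clock // time_bin, addr // addr_bin) for clock, addr in trace)


def render(density, shades=" .:*#"):
    """ASCII heat map: one row per address bucket (high addresses on top),
    one column per time bucket; darker characters mean more accesses."""
    if not density:
        return ""
    ts = [t for t, _ in density]
    addrs = [a for _, a in density]
    peak = max(density.values())
    rows = []
    for a in range(max(addrs), min(addrs) - 1, -1):
        row = ""
        for t in range(min(ts), max(ts) + 1):
            row += shades[density.get((t, a), 0) * (len(shades) - 1) // peak]
        rows.append(row)
    return "\n".join(rows)


trace = [(0, 0), (1, 64), (2, 128), (10, 0), (11, 0)]
print(render(access_density(trace, time_bin=10, addr_bin=128)))
```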

XSP ‘20

| Dimension | DeepContext | NEUTRINO | XSP |
|---|---|---|---|
| Instrumentation / Data Pipeline | Uses a shim layer (DLMonitor) to intercept deep-learning framework calls, CUPTI/RocTracer GPU events and CPU Perf/PAPI counters, then co-aggregates them in one profile database. | Injects “probes” (snippet + trace-point + map) directly into GPU assembly at runtime; a hook driver and probe engine reassemble the code and stream results to user space. | Leverages distributed tracing by combining outputs from existing tools: manual timing for model-level, framework-specific profilers for layer-level, and NVIDIA’s CUPTI for GPU kernel-level. |
| Context-breadth Reconstructed | Rebuilds the full call-path (Python ➜ DL operator ➜ C++ ➜ GPU kernel) across PyTorch & JAX, NVIDIA & AMD GPUs, and even CPUs, so one trace covers the whole stack. | Stays inside a single GPU kernel but reaches instruction-level detail; it works on both NVIDIA & AMD back-ends. | Correlates events across the model, layer, and GPU kernel levels by building an interval tree from span timestamps to reconstruct parent-child relationships. |
| Automation & Guidance | Ships a pattern-matching performance analyzer that auto-suggests fixes (e.g., fuse ops, change data layout). | Provides raw measurements; interpretation is left to the user. The paper explicitly says leveraging those traces “is not our primary goal.” | Features an automated analysis pipeline that performs 15 types of analyses, including generating tables and roofline plots to systematically characterize models. |
| Visual Experience | Integrated VS Code panel with hierarchical flame-paths and metric drill-downs. | Introduces the DMAT plot that overlays memory-pressure, time and thread density in one heat-map for instant visual insight. | Generates static plots and tables, such as throughput graphs, layer distribution pie charts, and roofline analysis plots. |
| Programmability / Extensibility | Users extend analysis by writing new pattern-rules over the stored context/metrics; instrumentation itself is fixed (handled by DLMonitor). | A Python DSL or TOML lets you write custom probes, combine them cooperatively and even build ring-buffer maps, akin to eBPF for GPUs. | Extensible design allows integrating new profiling tools by implementing a “tracer interface” and adding new analyses to the automated pipeline. |
| Cross-Platform Coverage | One binary profiles PyTorch or JAX on NVIDIA, AMD, x86 or ARM with the same UI. | Currently supports CUDA 12 + ROCm/HIP on Linux for NVIDIA & AMD GPUs. | The evaluated implementation is for TensorFlow and MXNet on NVIDIA GPUs (Turing, Volta, Pascal, Maxwell). |
| Profiling Overhead | Comparable to state-of-the-art tools while cutting trace size dramatically through runtime metric aggregation. | Lightweight probes slow kernels only 1.04× on average and burn ≈ 4 extra registers; heavy DMAT probes are ~7×. | Uses a “leveled experimentation” approach to measure and account for overhead from underlying profilers like CUPTI, which can be significant (over 100x for memory metrics). |
| Safety / Verification | Relies on mature vendor APIs, so safety comes “for free” from CUPTI/RocTracer. | Includes a verifier that rejects probes touching original registers, flow-control instructions or shared-memory, preventing crashes. | Inherits safety from the established, underlying profilers it uses at each stack level (e.g., framework profilers, CUPTI). |
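XSP’s correlation step reconstructs parent-child relationships from span timestamps alone. Here is a minimal sketch of that idea. It swaps XSP’s interval tree for a simpler sort + stack pass, which works only when spans nest cleanly; the Span class, the level encoding, and the sample timings are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    level: int    # hypothetical encoding: 0 = model, 1 = layer, 2 = GPU kernel
    start: float  # timestamps on a common clock
    end: float
    children: list = field(default_factory=list)


def build_span_tree(spans):
    """Attach each span to the innermost span that encloses it in time and sits at a
    shallower level. Sort + stack stands in for XSP's interval tree; it assumes
    spans nest cleanly (no partial overlaps)."""
    roots, stack = [], []
    # Earlier start first; on ties, shallower level first, then longer span first.
    for s in sorted(spans, key=lambda sp: (sp.start, sp.level, -sp.end)):
        # Pop spans that cannot be s's parent.
        while stack and not (stack[-1].start <= s.start and s.end <= stack[-1].end
                             and stack[-1].level < s.level):
            stack.pop()
        (stack[-1].children if stack else roots).append(s)
        stack.append(s)
    return roots


def show(node, depth=0):
    print("  " * depth + node.name)
    for child in node.children:
        show(child, depth + 1)


spans = [
    Span("model", 0, 0, 100),
    Span("conv1", 1, 0, 40), Span("relu1", 1, 40, 60),
    Span("gemm_kernel", 2, 5, 30), Span("relu_kernel", 2, 45, 50),
]
tree = build_span_tree(spans)
for root in tree:
    show(root)
```

Real GPU kernels run asynchronously and can finish after their launching layer’s CPU span, which is one reason XSP needs more than naive containment; the sketch ignores that.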

How They Fit Together

These three tools represent different philosophies and target distinct, but complementary, levels of the deep learning performance optimization workflow.

- XSP was a pioneering tool that demonstrated the value of an “across-stack” view. It correlates high-level framework operations with the GPU kernels they produce. It is best for systematic, offline analysis to compare the performance characteristics of different models, frameworks, and hardware generations by identifying which layers or kernels are dominant.

- DeepContext builds on this by providing a live, developer-centric experience. It is designed for everyday ML practitioners and application developers. Its key strength is reconstructing the entire call chain from the Python script down to the GPU kernel, across multiple frameworks and hardware vendors, and then automatically suggesting concrete optimizations within an IDE.

- NEUTRINO is the most specialized tool, targeting GPU systems researchers, compiler developers, and authors of high-performance kernels. It offers unparalleled, fine-grained visibility inside a single GPU kernel, down to the assembly instruction level. It sacrifices the broad, full-stack view of DeepContext and XSP for programmable, in-depth kernel introspection.

In a practical workflow, a developer might first use DeepContext to identify a performance bottleneck and determine that a specific GPU kernel in their PyTorch model is the culprit. If the automated suggestions aren’t sufficient, they could then use NEUTRINO to attach custom probes to that specific kernel’s assembly code, analyzing its memory access patterns with a DMAT plot to understand the root cause of the inefficiency at the hardware level. XSP provides the foundational concepts and analyses that inform the design of both of these more modern tools.

Scalana ‘20 ‘24

There are two versions of Scalana: the original paper at SC '20 and an extended version in TPDS '24. The table below compares the two.

| Comparison Dimension | Early Version (SC '20) | Current Version (TPDS '24) |
|---|---|---|
| Core Idea | Combines static program analysis with dynamic, sampling-based profiling to automatically identify root causes of scalability bottlenecks using a Program Performance Graph (PPG) and a backtracking algorithm. | The core idea remains the same, but the methodology, technical implementation, and evaluation are significantly deepened and expanded. |
| Analysis Scope | Primarily supports C and Fortran MPI programs based on source code analysis using LLVM. Extending to other models like OpenMP is noted as future work. | Significantly Expanded: • Supports direct analysis of executable binaries (using Dyninst). • Adds support for GPU programs (CUDA) and other parallel models like OpenMP and Pthreads. |
| Key Techniques & Depth | Proposes the fundamental framework of graph generation and backtracking detection. Uses LLVM for static analysis. | Technically Deepened: • Introduces optimizations for binary analysis like parallel analysis and granularity coarsening. • Details more refined, low-overhead data collection techniques like lightweight indirect call analysis. • Adds a formal complexity analysis of the backtracking algorithm. |
| Evaluation Scale | Evaluated on up to 2,048 processor cores. | Significantly Enlarged: • Evaluated on up to 16,384 processor cores. • Includes a scalability analysis of the tool itself against parameters like process count and sampling frequency. |
| Overhead & Performance | • Runtime Overhead: 1.73% on average for up to 2,048 processes. • Storage Cost: on the order of kilobytes (KB) to megabytes (MB), far less than tracing-based tools. | • Runtime Overhead: 5.65% on average for up to 16,384 processes (including I/O). • Storage Cost: on the order of megabytes (MB) to gigabytes (GB), while still significantly less than tracing tools. |
| Optimization Impact | Achieved up to 11.11% performance improvement on 2,048 processes by fixing detected bottlenecks. | Optimization impact is more significant, achieving up to 33.01% performance improvement. |
| Tool Maturity & Usability | Provides a GUI named ScalAna-viewer and a clear command-line workflow. | The tool is more mature: it provides the same GUI and adds explicit configuration guidance for key parameters like MaxLoopDepth and ImbThd, improving usability. |
| Case Studies | Evaluated applications including NPB, Zeus-MP, SST, and Nekbone. | Case studies are updated and more in-depth, evaluating new applications like LAMMPS (CPU/GPU), Sweep3D, HPL-GPU, and HPCG-GPU, replacing some of the earlier cases. |
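The backtracking idea can be illustrated with a heavily simplified sketch: start from a symptom vertex (say, a slow MPI_Wait), walk dependence edges backwards, and stop at the first vertex whose per-process times are imbalanced. The graph encoding, the vertex names, and the max/mean test against an ImbThd-like threshold are my assumptions; ScalAna’s real algorithm runs on its PPG and first detects non-scalable vertices across process counts.

```python
from collections import deque


def backtrack_root_cause(preds, times, symptom, imb_thd=1.5):
    """preds: {vertex: [vertices it depends on]}, mixing intra-process data/control
    edges and inter-process communication edges.
    times: {vertex: [execution time on each process]}.
    Walk breadth-first backwards from a symptom vertex and return the first
    upstream vertex whose max/mean time across processes exceeds imb_thd,
    or None if no reachable vertex is that imbalanced."""
    seen, queue = {symptom}, deque([symptom])
    while queue:
        v = queue.popleft()
        per_proc = times.get(v, [])
        if v != symptom and per_proc:
            mean = sum(per_proc) / len(per_proc)
            if mean > 0 and max(per_proc) / mean > imb_thd:
                return v
        for p in preds.get(v, []):
            if p not in seen:
                seen.add(p)
                queue.append(p)
    return None


# Hypothetical 4-rank example: ranks 1-3 wait because rank 0 does 5x the work.
preds = {"mpi_wait": ["compute_loop"], "compute_loop": ["read_input"]}
times = {
    "mpi_wait": [1.0, 5.0, 5.0, 5.0],
    "compute_loop": [10.0, 2.0, 2.0, 2.0],
    "read_input": [1.0, 1.0, 1.0, 1.0],
}
print(backtrack_root_cause(preds, times, "mpi_wait"))
```

The point the sketch captures is that the symptom (waiting) and the cause (imbalanced compute) live at different vertices, so a flat profile alone would blame MPI.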

Blog post: Scalana: A (Not-So) Deep Dive into its Codebase

Please pay attention to these two papers:

- Dynamic Statistical Profiling of Communication Activity in Distributed Applications '02

- Identifying The Root Causes Of Wait States In Large-Scale Parallel Applications '16

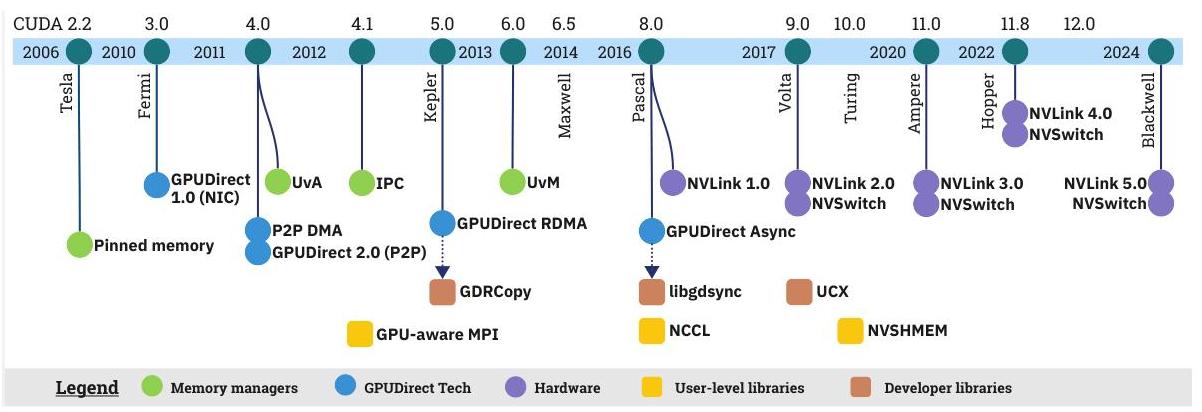

Landscape of GPU-Centric Communication ‘24

The GPUDirect family represents a series of innovations that progressively eliminate CPU involvement:

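- GPUDirect 1.0: Let the GPU and network interface card (NIC) drivers share a single pinned host-memory buffer, eliminating a redundant copy between driver-specific buffers (data still passes through system memory)

- GPUDirect 2.0 (Peer-to-Peer): Allowed direct GPU-to-GPU transfers within a node

- GPUDirect RDMA: Let the NIC read and write GPU memory directly, so remote direct memory access (e.g., over InfiniBand) no longer stages data in host memory

- GPUDirect Async: Let the GPU trigger and synchronize network operations prepared by the CPU, taking the CPU off the communication critical path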

NVLink-C2C ‘23

NVLink-C2C is NVIDIA’s ground-referenced, single-ended 40 Gbps/pin link that stitches Grace CPUs to Hopper/Blackwell GPUs (or to another Grace) into a single, cache-coherent address space. It supplies up to 900 GB/s aggregate bandwidth (450 GB/s each direction) at only 1.3 pJ/bit, and reaches a bit error rate (BER) of 10⁻¹⁵ with a 0.3 UI eye margin without forward error correction. This moves the traditional CPU↔GPU “data copy” bottleneck out of the critical path; instead, locality, coherence traffic, and NUMA effects dominate performance analysis.

Physical‑Layer Fundamentals

- Signaling & Pins – Ground‑referenced NRZ at 40 Gbps per pin; nine data signals form a 45 GB/s lane per direction.

- Bandwidth Scaling – Grace‑Hopper pairs expose ten such lanes, delivering 450 GB/s each way (900 GB/s bidirectional).

- Energy & Area – 1.3 pJ/bit; roughly 25× more energy-efficient and 90× more area-efficient than a PCIe 5 PHY.

- Signal Integrity – 0.3 UI horizontal eye‑width at BER 10⁻¹⁵ with no FEC, minimising latency.

Architectural Integration

- Unified Coherent Memory – NVLink‑C2C carries Arm AMBA CHI, allowing line‑granular coherence across CPU L3 and GPU L2.


System Topologies

- Grace‑Hopper Superchip: one Grace + one Hopper on a module; CPU sees GPU HBM as an additional NUMA node.

- Grace Superchip: dual Grace CPUs share LPDDR5X pools over C2C, appearing as a 144‑core NUMA system.

Performance Characteristics

| Metric | Peak | Measured (research/benchmarks) | Notes |
|---|---|---|---|
| Bandwidth (H2D) | 450 GB/s | 375 GB/s | Comm-Scope micro-benchmarks |
| Bandwidth (D2H) | 450 GB/s | 297 GB/s | Same study |
| CPU→GPU Remote-HBM Latency | ~800 ns | 800–1000 ns | Memory-disaggregation eval. |
| GPU Local HBM Latency | ~300 ns | | Baseline for comparison |

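Summing the two measured directions gives ≈ 672 GB/s, about 75% of the 900 GB/s bidirectional peak once protocol and software overheads are included. This sum only reflects concurrent throughput if both directions can sustain their individually measured rates at the same time.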
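The figures in this section chain together, so here is the arithmetic redone in one place. The per-pin, per-lane, lane-count, energy, and measured values are the ones quoted above; the link-power number is my own derivation (bits per second times energy per bit at full bidirectional load), not a figure from the paper.

```python
GBPS_PER_PIN = 40   # Gb/s per single-ended data pin (NRZ)
PINS_PER_LANE = 9   # data signals per lane, per direction
LANES = 10          # lanes in a Grace-Hopper pairing
PJ_PER_BIT = 1.3    # link energy per bit

lane_gbytes = GBPS_PER_PIN * PINS_PER_LANE / 8  # GB/s per lane, one direction
per_direction = lane_gbytes * LANES             # GB/s each way
bidirectional = 2 * per_direction               # aggregate GB/s

# Power if the link ran flat out in both directions: (bits/s) * (J/bit).
link_watts = bidirectional * 1e9 * 8 * PJ_PER_BIT * 1e-12

measured_h2d, measured_d2h = 375, 297           # GB/s, from the table above
efficiency = (measured_h2d + measured_d2h) / bidirectional

print(lane_gbytes, per_direction, bidirectional)
print(f"~{link_watts:.2f} W at full load, {efficiency:.1%} of bidirectional peak")
```

Roughly 9.4 W for 900 GB/s is what makes it plausible to treat the CPU↔GPU hop as cheap enough for fine-grained coherent access rather than bulk copies.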

GH200 Case Study ‘24

The paper analyzes the memory hierarchy and data-movement performance of the Quad GH200 node in the Alps supercomputer at CSCS. As HPC and AI workloads become increasingly limited by memory bottlenecks, optimizing intra-node communication latency and bandwidth has become a critical driver of new architectural developments.

| Component | Description | Key Specifications |
|---|---|---|
| Quad GH200 Node | The basic unit of the system shown in Figure 1, composed of 4 GH200 superchips. | 288 CPU cores, 4 GPUs |
| GH200 Superchip | An integrated chip with a Grace CPU + Hopper GPU. | Each GH200 has 96 GB of HBM3 and 128 GB of LPDDR5 memory. |
| NVLink-C2C | Connects the CPU and GPU within a single GH200. | 900 GB/s bidirectional bandwidth, cache coherent. |
| NVLink | Connects the Hopper GPUs on different GH200s. | 150 GB/s unidirectional bandwidth. |
| Grace Interconnect | Connects the Grace CPUs on different GH200s. | 150 GB/s bandwidth. |
| Slingshot | Connects the node to the external supercomputer network. | 25 GB/s unidirectional bandwidth (per GH200). |
| NUMA Architecture | The entire node is a system with 8 NUMA domains. | Grace CPU (NUMA 0, 1, 2, 3), Hopper GPU (NUMA 4, 12, 20, 28). |

Core System Architecture

The fundamental building block of the system is the Quad GH200 node, which integrates four Grace Hopper Superchips into a single, cohesive NUMA system.

| Component | Description | Key Features |
|---|---|---|
| Grace Hopper Superchip (GH200) | The basic unit, tightly integrating a Grace CPU (Arm architecture) and a Hopper GPU. | Forms a single, powerful processing unit. |
| NVLink-C2C Interconnect | A high-speed chip-to-chip link connecting the Grace CPU and Hopper GPU. | • Bandwidth: 900 GB/s bidirectional. • Cache Coherency: Allows CPU and GPU to share a unified address space and access all main memory. • Advantage: 7x higher bandwidth than traditional PCIe 5.0. |
| Quad GH200 Node | The building block of the Alps supercomputer, consisting of four GH200 Superchips. | • Connectivity: Fully interconnected via NVLink (for GPUs) and Grace Interconnect (GI, for CPUs). • Total Resources: 288 CPU cores and 4 GPUs per node. • System View: Functions as a single NUMA system. |
| Heterogeneous Memory | Each GH200 features two types of memory: LPDDR5 associated with the Grace CPU and HBM3 with the Hopper GPU. | • LPDDR5 (CPU): ~500 GB/s bandwidth. • HBM3 (GPU): >4 TB/s bandwidth. • Implication: The memory pool itself is heterogeneous, making intelligent data placement crucial for performance. |

Key Performance Insights & Micro-benchmarking

The authors used a series of micro-benchmarks to explore the performance of different processing units (PUs) when performing read, write, and copy operations across various physical memory locations.

Read/Write Bandwidth

| Scenario | Observation | Performance Implication |
|---|---|---|
| Hopper GPU accessing Local DDR | Highly efficient, achieving 93% of theoretical bandwidth via the C2C interconnect. | The C2C interconnect provides effective access for the GPU to CPU-attached memory. |
| Grace CPU accessing Local HBM | Less efficient, achieving only 53%-64% of theoretical bandwidth. | Accessing HBM from the CPU is possible but not as performant as the reverse. |
| Concurrent Access (Noise Test) | When CPU and GPU access memory simultaneously, the C2C interconnect becomes a bottleneck. | Performance is significantly impacted, especially for write operations to HBM. |

Memory Copy Performance

| Operation Path | Observation | Limiting Factor |
|---|---|---|
| DDR to DDR (within the same GH200, copy executed by the Hopper GPU) | The data must traverse the interconnect twice (once to read, once to write back), limiting the effective bandwidth to half of the interconnect’s total bandwidth. | The C2C interconnect bandwidth is the bottleneck. |
| Grace CPU copying to a peer GH200 | Asymmetry in performance was observed. The Grace CPU is more efficient at pushing data from its local memory to a peer GH200 than the reverse operation. | The underlying data path and interconnect topology create performance asymmetries. |

Access Latency & Inter-Processor Communication

| Test / Scenario | Finding | Key Influencing Factor |
|---|---|---|
| Pointer Chase (Cross-C2C access) | Accessing memory across the C2C interconnect (e.g., Hopper accessing DDR) introduces significant latency. | The physical hop across the interconnect is a primary source of delay. |
| Cache Behavior | Hopper’s L2 cache can cache data from HBM. In some cases, accessing cached HBM data on a peer GH200 can be faster than accessing local DDR. | Caching plays a critical role in mitigating latency, sometimes in non-intuitive ways. |
| Ping-Pong Test (Atomic Flags) | Communication latency is extremely sensitive to the physical memory location of the atomic flag. | Latency is lowest when communication happens within a single, local GH200. |
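Pointer-chase benchmarks measure latency by making every load depend on the previous one. A common way to build the chain is Sattolo’s algorithm, which produces a random permutation that is a single cycle, so the chase touches every slot before repeating. The sketch below is my illustration of that data structure in Python, not the paper’s benchmark code; a real measurement would place the array in a chosen memory (DDR or HBM) and time the chase in C or CUDA.

```python
import random


def make_chase_chain(n, seed=0):
    """Sattolo's algorithm: returns nxt where nxt[i] is the slot visited after i.
    The permutation is a single n-cycle, so a chase from any slot touches every
    slot once before repeating, and the random order helps defeat prefetchers."""
    rng = random.Random(seed)
    nxt = list(range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # j in [0, i); allowing j == i could create shorter cycles
        nxt[i], nxt[j] = nxt[j], nxt[i]
    return nxt


def cycle_length(nxt, start=0):
    """Follow the chain until it returns to `start`; a real benchmark times this loop."""
    steps, i = 0, start
    while True:
        i = nxt[i]
        steps += 1
        if i == start:
            return steps


chain = make_chase_chain(1 << 12)
print(cycle_length(chain))  # 4096: every slot is visited exactly once
```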

Inter-Node Communication

| Benchmark | Finding | Recommendation |
|---|---|---|
| MPI | To fully saturate the external network bandwidth of a Quad GH200 node, at least four processes must be launched. | Bind each process to a separate network interface to maximize network throughput. |
| NCCL | Data locality is paramount for collective operations like AllReduce. Performance is far superior when using memory on the same GH200 for intra-node communication. | Placing data in the DDR memory of a peer GH200 during inter-node communication will severely degrade overall performance. |
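One practical way to act on the “four processes, one per GH200” advice is to derive every binding from the node-local rank. The sketch below uses the NUMA numbering from the first table; the assumption that GPU i and NIC i belong to GH200 i is mine and should be checked on the actual system (NIC selection is usually done through the MPI or libfabric configuration and is not shown). numactl’s --cpunodebind/--membind and CUDA_VISIBLE_DEVICES are standard; the helper functions are hypothetical.

```python
CPU_NUMA = [0, 1, 2, 3]     # Grace LPDDR5 NUMA domains (from the first table)
GPU_NUMA = [4, 12, 20, 28]  # Hopper HBM3 NUMA domains (from the first table)


def placement(local_rank, ranks_per_node=4):
    """Map a node-local rank to 'its' GH200 so cores, host memory, GPU and NIC
    all come from the same superchip. Assumes GPU i and NIC i sit on GH200 i."""
    gh200 = local_rank * len(CPU_NUMA) // ranks_per_node
    return {
        "gh200": gh200,
        "cpu_numa": CPU_NUMA[gh200],
        "hbm_numa": GPU_NUMA[gh200],
        "gpu": gh200,
        "nic": gh200,
    }


def launch_prefix(local_rank, ranks_per_node=4):
    """Command prefix pinning the rank's threads and host allocations to local LPDDR5."""
    p = placement(local_rank, ranks_per_node)
    return (f"CUDA_VISIBLE_DEVICES={p['gpu']} "
            f"numactl --cpunodebind={p['cpu_numa']} --membind={p['cpu_numa']}")


for rank in range(4):
    print(rank, launch_prefix(rank))
```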

Application Performance Analysis

The study further applied these micro-benchmark insights to real-world, memory-intensive applications.

| Application | Scenario | Performance Impact |

|---|---|---|

| GEMM | All matrix data is stored in local HBM. | The task is compute-bound, achieving high performance. |

| GEMM | Even one input matrix is moved out of local HBM. | Performance drops sharply; the task immediately becomes memory-bound. |

| Llama2 Inference | Model weights are placed in local HBM memory. | Inference time is significantly reduced. |

| Llama2 Inference | Model weights are placed in DDR or peer memory. | Inference time increases substantially, demonstrating the critical role of memory access speed. |
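A back-of-envelope estimate shows why weight placement matters so much for LLM inference: token-by-token decoding reads roughly every weight once per token, so a lower bound on per-token time is weight bytes divided by bandwidth. The model size (a 7B-parameter Llama 2 in FP16) is a hypothetical choice, since these notes don’t record which variant the paper used; the bandwidths are the peak figures quoted above (HBM3 at ~4 TB/s; DDR-resident weights reached by the GPU over C2C at ≤ 450 GB/s per direction).

```python
def decode_ms_per_token(params, bytes_per_param, bandwidth_gbs):
    """Lower bound on per-token decode latency if every weight is read once per
    token and the step is purely bandwidth-bound (ignores compute, KV cache,
    and any caching of weights)."""
    weight_bytes = params * bytes_per_param
    return weight_bytes / (bandwidth_gbs * 1e9) * 1e3


PARAMS = 7e9  # hypothetical: 7B-parameter Llama 2 in FP16 (2 bytes/param)
hbm = decode_ms_per_token(PARAMS, 2, 4000)  # weights in local HBM3, ~4 TB/s
ddr = decode_ms_per_token(PARAMS, 2, 450)   # weights in DDR, read over C2C
print(f"HBM: {hbm:.1f} ms/token, DDR over C2C: {ddr:.1f} ms/token, {ddr / hbm:.1f}x slower")
```

Under these assumptions the bound moves from about 3.5 ms to about 31 ms per token, which is consistent in direction with the substantial slowdown reported for DDR or peer-memory placement.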

Conclusion

The paper concludes that although the Quad GH200 node is a highly integrated, tightly coupled system, developers must treat it as a collection of four independent superchips interconnected by a high-speed network to achieve optimal performance. The NVLink-C2C technology successfully expands the available memory pool for each processing unit, opening up new possibilities for developing novel heterogeneous applications that mix CPU and GPU computing. However, a deep understanding of the data paths and a meticulous memory layout strategy are indispensable to fully harnessing its potential.